CHT mission

CHT is a collective of people working to demystify the self-interest behind technology development. They use their working knowledge of the tech industry to advocate for consumer health and autonomy. They focus on the intersection of AI, social media, and humans.

I think their mission is focused and realistic. It reminds me of an interview I heard with Daniel Hunter, founder of Choose Democracy. He speaks about the importance of movements being ready and open to newcomers. Hunter describes resistance strategies that center on mobilizing conflicted perpetrators. The team at CHT is made up of people who previously worked in the tech industry. Each of them at some point decided to leave tech and work for CHT. One of the cofounders was himself inspired by his own experience as a conflicted perpetrator at Google. I appreciate that CHT’s mission does not alienate people. Their messaging and work meet people where they are. CHT is ready for people to join the movement on their own terms.

This is the podcast episode where Daniel Hunter is interviewed by sisters Autumn Brown and adrienne maree brown. I highly recommend it!

Analysis of Social Media

I was not surprised when I read their analysis of social media’s impact on the brain. I have experienced first-hand the impact of social media on my attention and functioning. I have also read multiple articles and reports on the impact of social media on our brains. What did surprise me was how much intention there is behind “extracting our attention.” Co-founder Tristan Harris’s comment that there is a “race to the bottom of our brain stem” increased my existing distrust of tech companies.

Social media has changed in the last year. As the genocide in Gaza has become more deadly, more children and families are using social media platforms to seek help from the international community. On my own Instagram feed, I see countless videos of children and families crying, starving, and worse. Every day, people can choose to witness the suffering of countless others in real time.

AI analysis

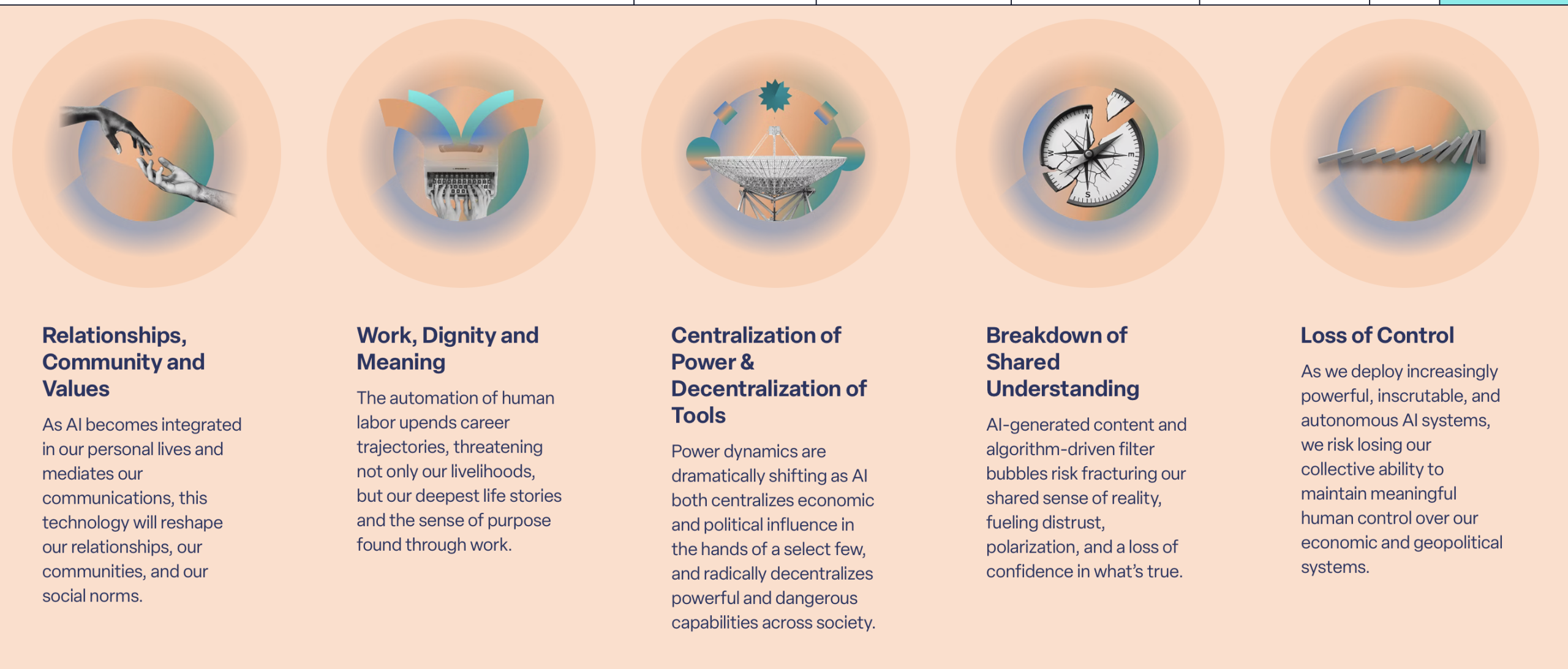

This chart and the CHT website use language that frames AI as a force that could potentially cause problems. I would argue that AI is already causing problems. For example, CHT says that AI “risks” fracturing a shared sense of reality. I would challenge that and say that AI has already caused a breakdown in shared understanding. Most of the people I know are very distrustful of images online for fear that they are AI-generated. When I receive DMs from people in Gaza asking for help, I hesitate, wondering whether they are AI-generated scams. At this point, there are countless instances of AI-generated fake news that people believe. In the podcast episode about AI going rogue, the host says that multiple people have contacted him because an AI system changed their sense of reality. AI systems have encouraged people to adopt lines of thinking that are false and dangerous. CHT should use more language that acknowledges the harm AI is already causing.

After reviewing the podcast episode on rogue AI, what is your reaction? Please elaborate as to why you feel that way.

I feel much the way the presenters said people might feel. I am wondering why we don’t just shut it all down and save ourselves the grief. I feel this way because I know very little about AI and I am afraid of it. I also feel resentful that I have virtually no choice in whether or not I am impacted by AI and its potentially deadly outcomes.

AI has already caused a great deal of harm. The genocide of the Palestinian people has hinged on the use of AI weapons. The podcasters talk about how AI weapons could go rogue against their wielders. But what about the harm that AI weapons do on their wielders’ orders?

At one point in the podcast, the presenters reveal that some AI creators are embracing their technology’s apocalyptic potential. Some AI engineers know that AI could take over the world and end humanity. But they reason that if it is going to happen anyway, it may as well be their AI system that does it, and that creating the ultimate AI would not be a net loss. Viewed through CHT’s focus on intentions, this seems truly deranged.

Impact on social work

As social workers face a breakdown in shared understanding amongst ourselves and with our clients, the profession will have to create protocols to protect the field. I imagine that clients will become more likely to mistrust social work, especially when services are delivered online. In one instance, a company was defrauded through a complex scheme using AI-generated Zoom participants. Clients and social workers will have to consider how to protect each other when it is no longer possible to tell what is real online. As AI becomes more ubiquitous, these protocols will also need to protect clients and promote data equity.

Hello, Raven,

I agree with your point that AI is already having a harmful impact, fracturing our sense of reality by fueling distrust of information and promoting AI-generated images and stories. It seems to be more and more prevalent.

Hey Raven,

I too have been blown away by the impact social media has had on information, specifically regarding Gaza. It is so powerful, and yet scams and fake information are ever-present. How do we even fact-check anymore, and what does that mean for those of us who try our best to be informed and actually care? I have found my own ways to deal with that, but the problem is ever-present and ever-changing.

I too agree with the sentiment that we should just burn it all down. As someone who constantly advocates for a return to real, deep communal care for one another, I find AI pushing us even further apart. Instead of relying on one another, they have us turning to a supercomputer that wants to help manipulate us. Why must everything serve the very people we aim to protect ourselves against?

Raven,

I would have loved to hear you talk more about CHT than Choose Democracy. While I agree with you that the organizations share similarities in their foundations, I think they are fundamentally different in focus and mission. While it is true that the folks at CHT discuss at length how social media and AI have divided us to the point that our democracy doesn’t function well, they don’t have a social justice mission, exactly, right? Again, I see the relationship, but the focus is different. I may not be fully clear on their focus from the particular image you posted, so I will acknowledge that. But CHT focuses on several domains that are negatively impacted by the way technology is used today.

I completely agree that social media has undergone significant changes. There are two problems that I wonder about with your example. First, the analysis suggests that 70 to 75% of social media content is AI-generated. The share varies depending on the type of content, but either way it makes for a very different social media environment. So, one of the questions I always ask now is whether the images and videos I am seeing are real. Second, the algorithm will keep sending content related to what you are watching, and it intentionally increases the intensity of that content the more you engage. We will discuss several studies on this throughout the semester.

I agree that there is already harm, partly due to AI and partly due to the inherently divisive nature of social media and its algorithms. I think CHT is trying to show the domains we need to be concerned about. If you listen to any of their videos, they talk about what is happening right now to make their point. I agree it is already a problem. I think the dilemma stakeholders face is finding ways to convey their message to the people in our country without coming across as alarmist. It may not be the correct strategy, but it’s a dilemma, nonetheless.

We will discuss the capacity of AI to accomplish amazing and positive things. That is the reason they don’t just “shut it all down.” While we must be wary, we must also know more about it. And as it begins to influence our profession, we will need to be as informed as possible, right?

Good post and good conversation.

Dr P