Discussion Post – Ethical OS Risk Zones in Social Work Practice

As technology becomes more central in social work practice, the Ethical OS Risk Zones remind us to think carefully about how digital tools affect our clients, our boundaries, and our ethical decision-making. Below, I discuss four risk zones and connect each one to two course topics or readings, explaining how each example reflects the risks we are navigating in our profession.

1. Risk Zone 2: Addiction & the Dopamine Economy

How this applies in social work:

Digital platforms are intentionally designed to keep users engaged. For vulnerable clients, especially youth, individuals with trauma histories, or people experiencing depression or anxiety, excessive engagement can worsen symptoms and impair daily functioning.

Course Connection #1 – Digital Transformation Article (Ronad, 2025)

In Ronad (2025), “Digital Transformation in Social Work,” the author discusses how technology is now deeply integrated into daily life, including for clients who rely heavily on digital environments. This creates both benefits and challenges. One challenge is how constant notifications, alerts, and app-based engagement trigger the dopamine system and reinforce compulsive use.

Why it fits the risk zone:

Clients may develop patterns of unhealthy technology dependence, and social workers must now assess digital habits as part of biopsychosocial assessments.

Course Connection #2 – Class Discussions on Youth & Social Media Habits

Throughout the semester, we talked about how young clients experience anxiety, mood changes, and body-image pressures tied to their digital consumption.

Why it fits the risk zone:

These conversations highlight how the dopamine-driven design of apps directly affects client mental health and requires social workers to incorporate digital literacy and coping strategies into treatment planning.

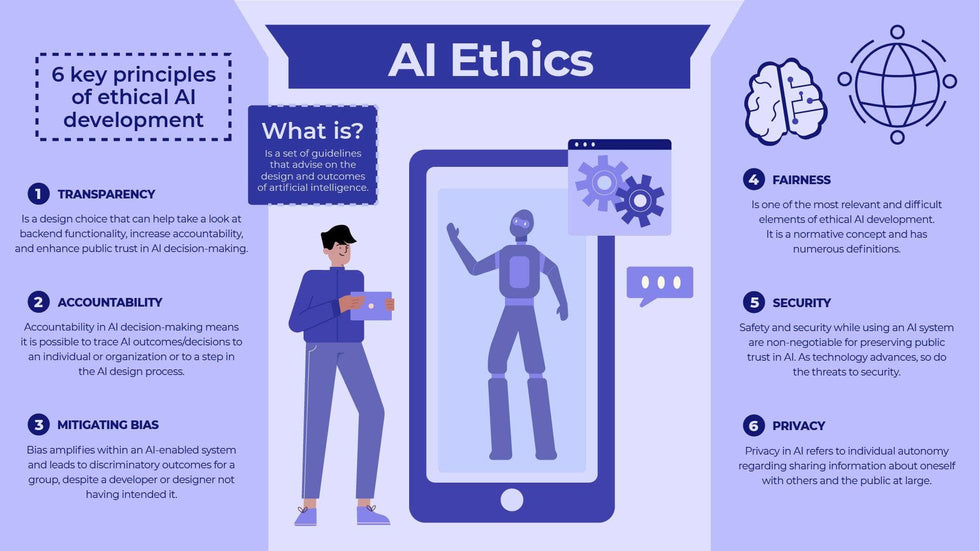

2. Risk Zone 4: Machine Ethics & Algorithmic Biases

How this applies in social work:

As AI becomes part of assessment tools, telehealth platforms, case management systems, and even risk-prediction algorithms, biases in these systems can produce unfair outcomes for marginalized groups.

Course Connection #1 – Reamer (2023), “Artificial Intelligence in Social Work: Emerging Ethical Issues”

Reamer emphasizes the ethical risks when social workers use AI-supported tools without understanding how algorithms make decisions.

Why it fits the risk zone:

Algorithms can reinforce racial, gender, and socioeconomic biases. If an AI used for mental health screening or risk assessment is trained on biased data, it may mislabel or unfairly categorize clients—especially Black, Brown, and low-income clients.

Course Connection #2 – Week 3 Reading on AI in CBT (Jiang et al., 2024)

The Jiang et al. (2024) article shows how AI is being integrated into CBT interventions. While this increases accessibility, it raises concerns about whether AI misinterprets cultural context, dialect, or emotion.

Why it fits the risk zone:

If AI programs cannot accurately read the emotional experiences of diverse clients, they could give harmful or inappropriate therapeutic suggestions.

3. Risk Zone 5: Surveillance State

How this applies in social work:

Technology used to “support” clients can also be used to monitor, track, and report on them in ways that damage trust, privacy, and safety.

Course Connection #1 – Discussion in Week 2 on Boundaries in the Digital Age

During our module on boundaries, we emphasized how digital communication, GPS tracking, telehealth platforms, and agency case systems store sensitive data.

Why it fits the risk zone:

Client information can be accessed, archived, or surveilled without clients fully understanding it. Social workers must balance safety and confidentiality with digital record-keeping requirements.

Course Connection #2 – Reamer’s (2023) Ethical Issues Article

Reamer discusses risks of “digital footprints” in service delivery and how agencies may unintentionally track client behavior.

Why it fits the risk zone:

This highlights how clients might be monitored through automated systems—sometimes without their explicit informed consent—creating ethical dilemmas about privacy, autonomy, and power.

4. Risk Zone 7: Implicit Trust & User Understanding

How this applies in social work:

Clients often trust technology (telehealth apps, AI chatbots, mental health tools) without understanding how their data is used or what limitations these tools have.

Course Connection #1 – Balogun et al. (2025), “Integrating Telehealth for Vulnerable Groups”

This reading shows how telehealth increases accessibility but also creates misunderstandings—clients may assume the technology is fully safe, private, and accurate.

Why it fits the risk zone:

Clients may not recognize that telehealth platforms collect data, may lack end-to-end security, or may experience glitches that affect treatment quality. Their “implicit trust” can place them at risk.

Course Connection #2 – Class Conversations on Client Education & Digital Literacy

We discussed the responsibility social workers have to educate clients about digital risks, privacy limitations, and how data is stored.

Why it fits the risk zone:

Clients often assume that digital tools are automatically safe or always correct. Social workers must actively teach clients how to use technology ethically and safely.

Conclusion

Across the semester, we learned that digital tools bring both benefits and profound ethical challenges. The Ethical OS framework helps us understand these risks and reminds us that as social workers we must be intentional, culturally aware, and critically reflective whenever technology becomes part of our practice. Whether it’s algorithmic bias, surveillance concerns, addictive design, or misplaced trust in digital tools, our profession must stay educated and advocate for ethical technology use to protect vulnerable clients.

Lonique,

You clearly have a good understanding of the zones and the importance of these issues for the profession. You also chose good articles that exemplify each zone and its impact. You could have translated your answers into paragraph format and used your own thoughts and words to link them together, and this would have been a stupendous post. You met the assignment; that isn’t a problem. I just wanted to suggest that a more narrative written answer would have been stronger, and it would have given you a chance to communicate these issues in the format you will most likely be using in your professional life.

Dr P